Proprio

Real-time wearable data pipelineProprio was a full-stack real-time data platform: Swift watch app → AWS Lambda ingestion → MongoDB → ML classification → web dashboards. $100K STTR funded, shown to have 20% better accuracy than state-of-the-art approaches.

End-to-end ownership of a streaming pipeline that processed continuous IMU data from Apple Watches, ran patient-specific ML classifiers, and served results to clinician-facing dashboards.

A sensor-to-dashboard pipeline with real-time streaming, serverless ingestion, document storage, ML inference, and multi-tenant visualization.

Engineering Challenge

The problem: continuous sensor data from wearables, but no infrastructure to make it useful.

- High-frequency capture — IMU data at 30Hz from Apple Watch

- Two-hop delivery — watch → iPhone (local), iPhone → AWS (on WiFi)

- Unreliable connectivity — gaps in uploads, packet loss, out-of-order delivery

- Per-user models — population models fail; each user needs calibration

- Multi-tenant access — patients, clinicians, and researchers need different views

The solution: a resilient upload pipeline with local buffering on iPhone, deduplication and gap handling on ingest, and a ML pipeline that trains per-user classifiers.

System Architecture

Serverless Lambda Pipeline Handling 30Hz IMU Streams

- Serverless ingestion — Lambda functions handle bursty uploads from iPhones

- Deduplication — handle retries and out-of-order delivery gracefully

- Gap detection — identify missing time windows, request re-upload if available

- Batched writes — aggregate samples before storage

MongoDB with Time-Series Indexing for Multi-Tenant Access

- Document model — flexible schema for heterogeneous sensor data

- Time-series indexing — optimized for range queries on timestamped data

- Per-user partitioning — data isolation for multi-tenant access

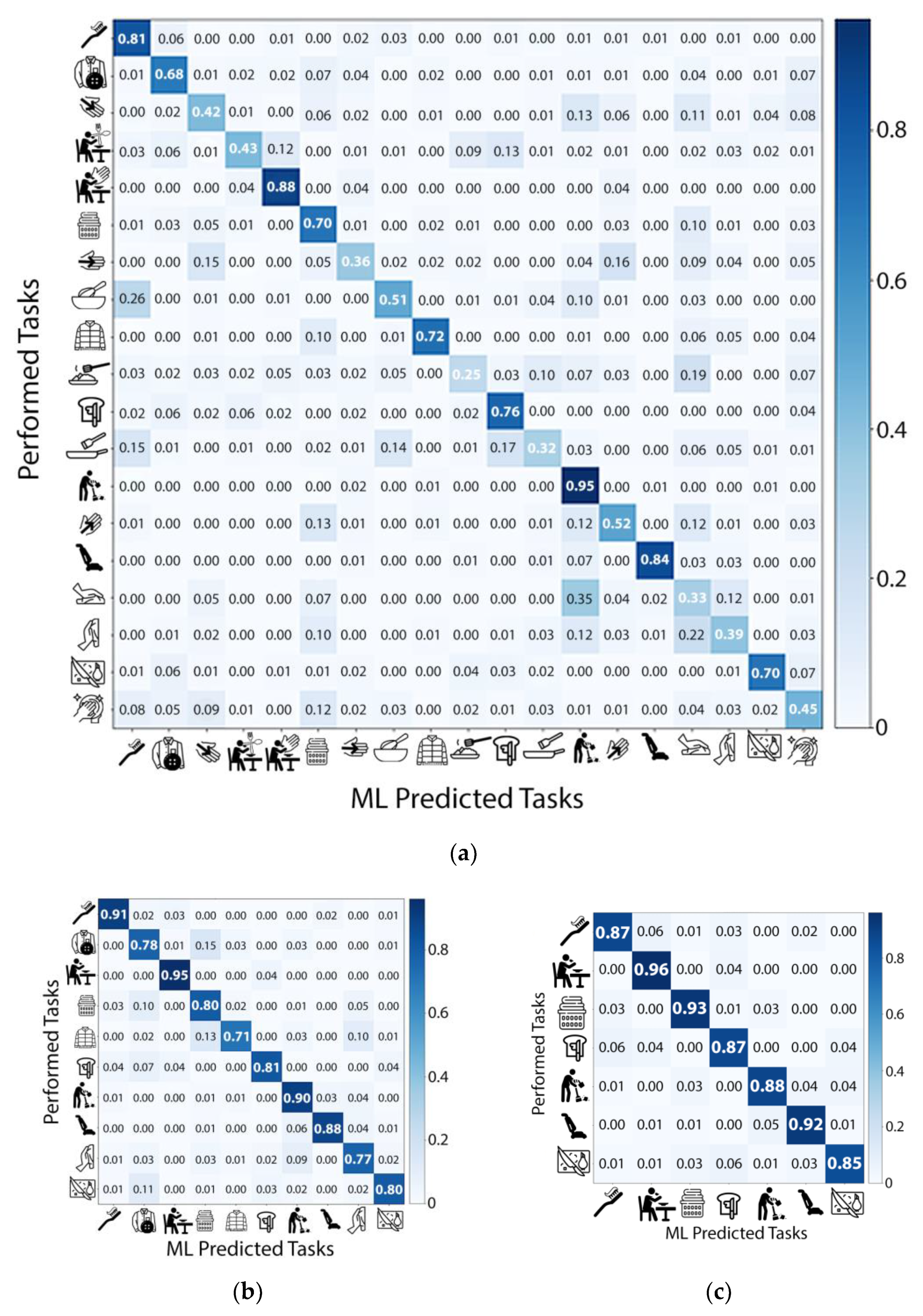

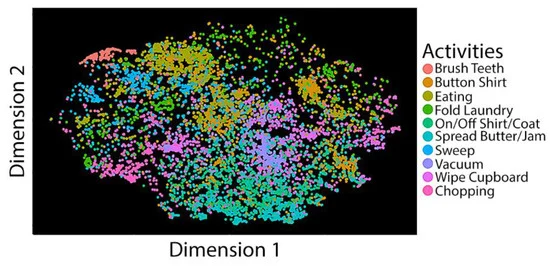

Per-User Classifiers with 20% Better Accuracy

- Feature extraction — time-domain and frequency-domain features from raw IMU

- Per-user training — calibration sessions generate labeled data for individual classifiers

- Model registry — versioned classifiers deployed per user

Watch → iPhone → Cloud Full-Stack Pipeline

- Swift watch app — background 30Hz IMU capture, streams to paired iPhone

- Swift iPhone app — local buffering, WiFi-triggered uploads to AWS

- C# data tools — MongoDB queries, data structuring for analysis

- Web dashboards — role-based views for patients, clinicians, researchers

My Role

As Co-Founder & Technical Lead, I owned the full technical stack:

- Designed and implemented the end-to-end data pipeline from watch to dashboard

- Built the serverless ingestion layer and MongoDB data model

- Co-invented the patient-specific classification method (published, peer-reviewed)

- Wrote the STTR grant that secured $100K funding

- Ran 50+ customer discovery interviews and clinical validation studies

The company pivoted when we hit the R01 funding barrier. The experience shaped how I think about building data-intensive platforms—real-time pipelines, per-user state, multi-tenant access patterns.

Results

- 20% better accuracy than published state-of-the-art (per-user models vs population models)

- $100K STTR grant — wrote and won federal funding for R&D

- Peer-reviewed publication — validated methodology in production

- NSF I-Corps + BioGenerator — customer discovery and startup accelerator

How does this connect? Proprio showed me this pattern in a different domain: sensor architecture determining what behaviors we could recognize. See the throughline →