Nathan Baune

Platform & Systems Engineer

I build production tools, ML systems, and complex software platforms — from real-time EEG classification and neuroimaging pipelines to a GPU-accelerated simulation engine with its own visual design environment, multi-language CLI, and LLM-powered natural language interface.

The thread connecting my work: studying how information processing architectures produce emergent behavior—from neural circuits to ML models to simulated ecosystems.

For the past decade I've built production systems in neuroscience and health tech:

- Research tools and systems shipped to 9 labs across 3 institutions.

- 2 startups: co-founder (PlatformSTL LLC), founder & principal engineer (Gothic Grandma LLC).

- MR.Flow: GUI orchestrating neuroimaging pipelines with parallel execution optimized to user hardware. Setup and deployment reduced from weeks to ~45 minutes.

- Epoche: ML workbench for neurophysiology—feature extraction, grid search, ensemble optimization, and interpretability tools for mechanistic hypothesis generation and model/ensemble deployment.

- Real-time ML classification of brain state for closed-loop experiment control, therapy onset, and neurostimulation. <40ms end-to-end latency.

- Proprio (PlatformSTL, St. Louis): STTR-funded wearable activity classification platform (hardware → cloud → ML pipeline) for monitoring patient care and long-term outcomes.

- Countless rapid-turnaround prototypes and hours of engineering, scientific, and statistical consulting.

Current Interests

I'm actively exploring the intersection of AI and complex systems:

- Agentic AI architectures — how multi-model orchestration, tool use, and planning emerge in LLM-based systems

- Interpretability and alignment — making AI behavior legible and predictable, from SHAP/LIME to mechanistic interpretability

- Simulation as testbed — using MUSE's transparent, deterministic systems to study how architectural choices shape emergent behavior

- Human-AI collaboration — designing interfaces where AI augments rather than replaces human judgment (see: BABEL, Epoche)

Read more: How this question connects everything I've built →

Projects

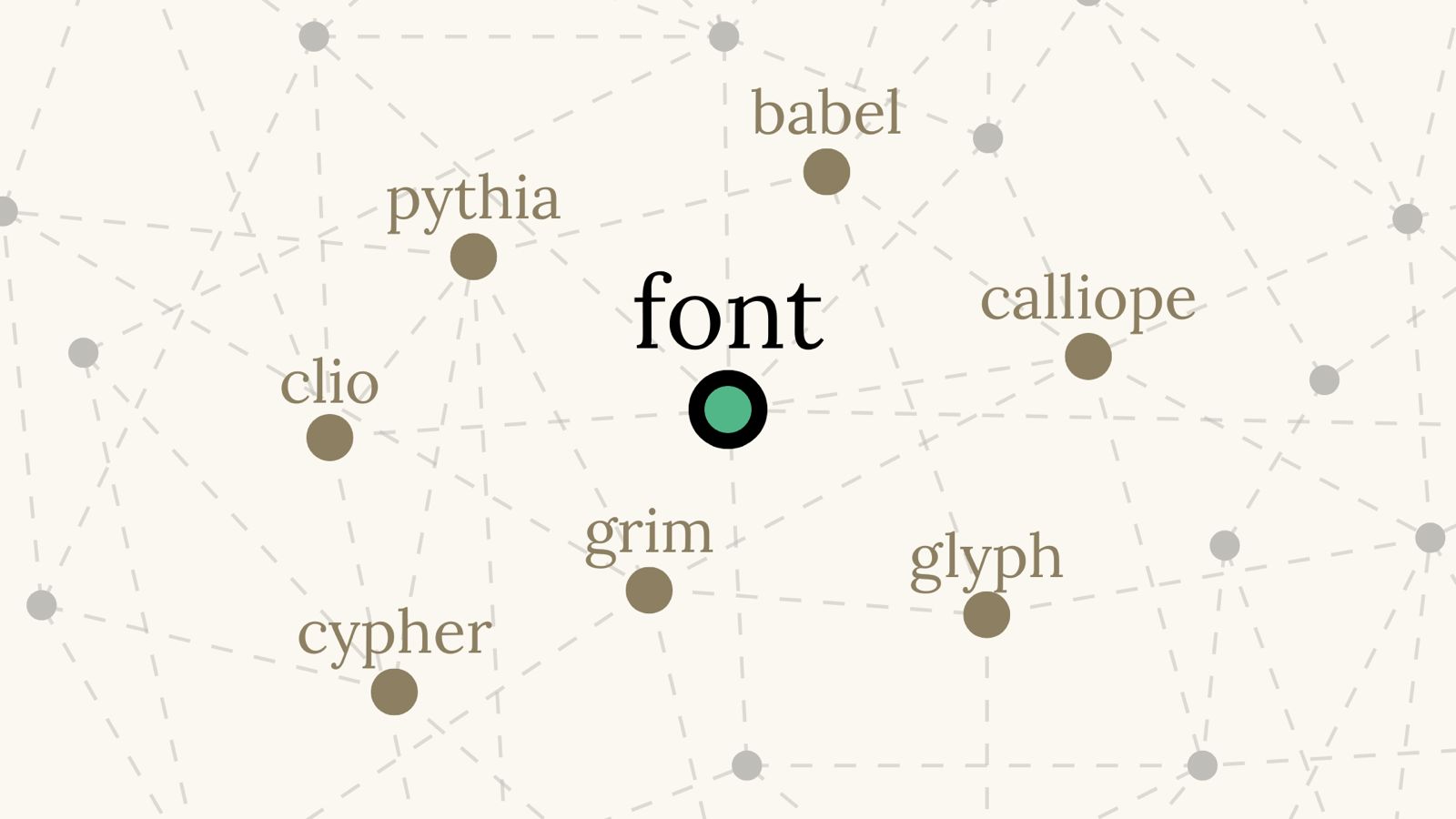

MUSE Ecosystem

2025–Present

Emergent world simulation engine (100k+ entities) with biological/psychological systems, plus the tooling ecosystem to design, debug, and experience it. Visual system designs compile directly to GPU kernels, with no hand-written simulation code.

Explore the full ecosystem → to get oriented, or click on a tool below to fast-track to one that catches your eye.

FONT

Simulation Engine

Biological simulation engine scaling to 100K+ entities. GPU-accelerated and deterministic.

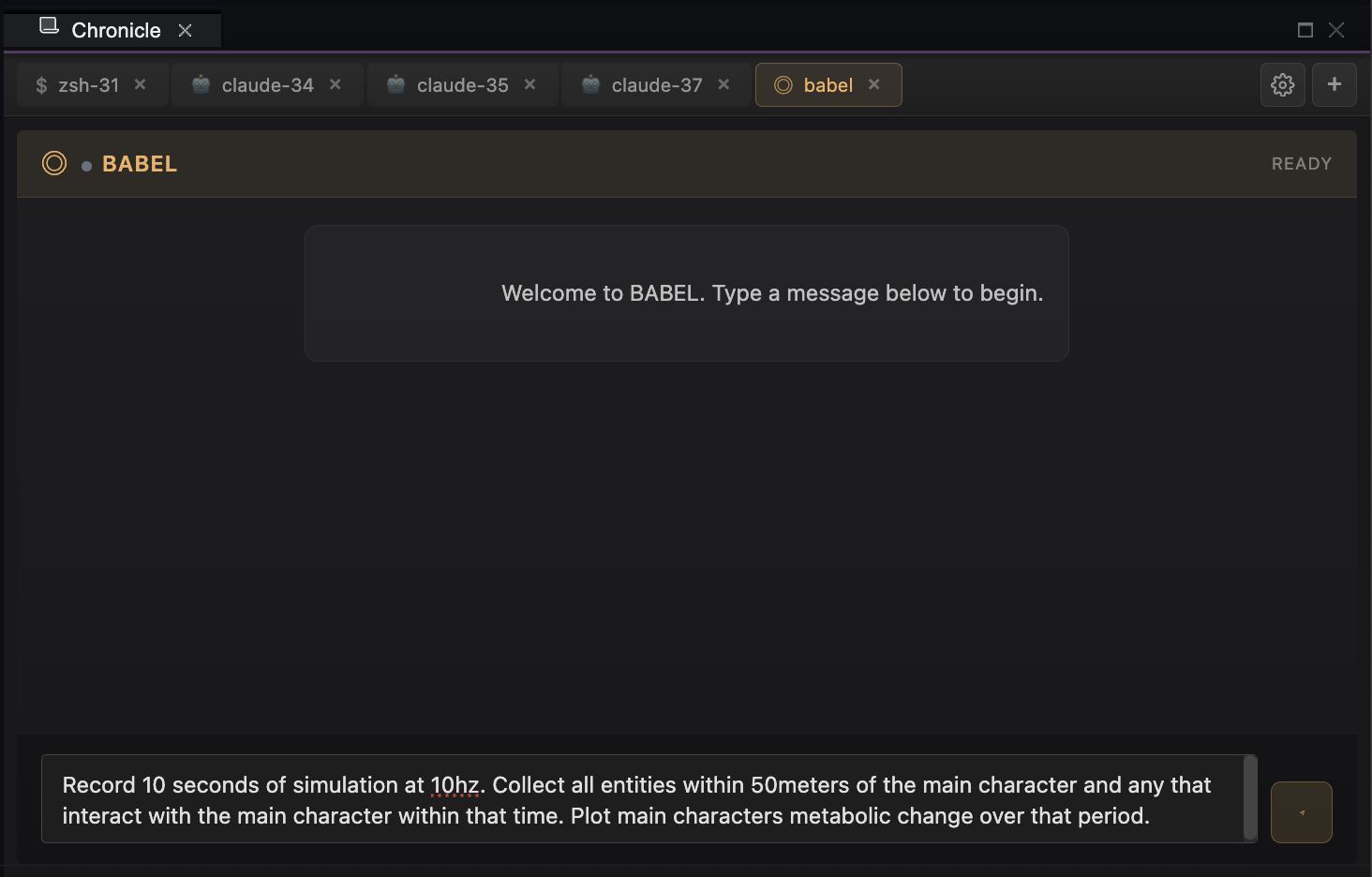

BABEL

Semantic Interface

Natural language parsing with vector-based command matching. Translates intent across all tools.
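As a rough illustration of what vector-based command matching can look like, here is a minimal sketch. It is not BABEL's implementation: the command names, the descriptions, the bag-of-words "embedding", and the threshold are all assumptions made for the example. A real system would most likely use a learned embedding model in place of word counts.

```python
import math
from collections import Counter

# Hypothetical command catalogue; names and descriptions are illustrative only.
COMMANDS = {
    "open_profiler": "open the profiler for entity systems",
    "launch_world": "launch a world in the reader",
    "update_library": "download updates for the world library",
}


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a learned model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def match_command(utterance, threshold=0.2):
    """Return the best-matching command name, or None if nothing is close enough."""
    query = embed(utterance)
    scored = [(cosine(query, embed(desc)), name) for name, desc in COMMANDS.items()]
    score, name = max(scored)
    return name if score >= threshold else None
```

Calling `match_command("please launch the world")` picks the command whose description vector is closest to the utterance. The threshold lets the matcher decline instead of guessing when no command is similar.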

GLYPH

Interactive E-Reader

Interactive fiction interface where you guide, not control. Type or speak to influence the world.

CALLIOPE

Launcher & Library

Living Worlds marketplace and library manager. Downloads worlds, manages updates, launches GLYPH.

CYPHER

Entity Systems Designer & Profiler

Visual system designer and profiler. Design → schema → GPU kernels.

CLIO

AI-Augmented Dev Environment

AI-augmented dev environment with codebase analysis, multi-terminal, and session tracking.

GG.Flow

2026–Present · Alpha

Visual pipeline platform for multimodal scientific research. 148 nodes across 9 domains, including EEG, MRI, ML, stats, and viz, with a language-agnostic SDK, content-addressable caching, and auto-generation from Python, R, CLI, and YAML.
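For readers unfamiliar with content-addressable caching, the idea can be sketched in a few lines. This is an assumption-laden illustration, not GG.Flow's code: `cache_key`, `run_cached`, and the in-memory dictionary are hypothetical names chosen for the example. The cache key is derived from a hash of everything that determines a node's output, so identical work is never repeated.

```python
import hashlib
import json

# In-memory stand-in for a persistent store (hypothetical; a real cache would live on disk).
_cache = {}


def cache_key(node_name, params, input_digests):
    """Hash the node identity, its parameters, and the digests of its inputs."""
    payload = json.dumps(
        {"node": node_name, "params": params, "inputs": list(input_digests)},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_cached(node_name, func, params, input_digests):
    """Run func(**params) only if no result is stored under the content-derived key."""
    key = cache_key(node_name, params, input_digests)
    if key not in _cache:
        _cache[key] = func(**params)
    return key, _cache[key]
```

Because the key depends only on content, changing a parameter or an upstream input produces a new key and a fresh computation, while a repeated call with identical inputs is served from the cache.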

MR.Flow

2024–Present

Pipeline orchestration system for MRI processing. Worker coordination, task queuing, dependency management, and failure recovery for multi-stage analysis workflows across 150+ datasets.
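Dependency management for a multi-stage workflow can be illustrated with a small sketch. The stage names below are hypothetical and this is not MR.Flow's scheduler. It uses Python's standard-library `graphlib` (3.9+) to group stages into batches whose members could run in parallel, and it leaves out queuing and failure recovery.

```python
from graphlib import TopologicalSorter

# Hypothetical stages; each key maps to the set of stages it depends on.
STAGES = {
    "convert": set(),
    "skull_strip": {"convert"},
    "motion_correct": {"convert"},
    "register": {"skull_strip", "motion_correct"},
}


def execution_batches(graph):
    """Group stages into batches; every stage in a batch has all dependencies done."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)  # mark the whole batch complete to unlock successors
    return batches
```

In a real orchestrator, each ready stage would be handed to a worker and marked done as it finishes rather than in whole batches. The batch form is used here only to keep the example short.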

Epoche

2023–Present

ML workbench for neurophysiology. Feature extraction, grid search, ensemble optimization, cross-model interpretability, and publication export, with serialization for deployment to real-time C++ execution backends.

BIDS-SQL

2026–Present

Database schema and query infrastructure for BIDS neuroimaging datasets. SQLite/PostgreSQL schemas with a Python library for import, export, and processing provenance tracking. Powers MR.Flow's dataset management.

Proprio

2017–2021

Real-time wearable data platform for stroke rehabilitation. Apple Watch IMU streaming → AWS Lambda ingestion → MongoDB storage → patient-specific ML classifiers → clinician dashboards. $100K NIH STTR funded, with a peer-reviewed publication.

Research Portfolio

2015–2025

A decade of research tools and systems across 9 labs: real-time closed-loop ML, robotic manipulandum control, VR experimental platforms, multi-device hardware synchronization, and data pipelines.

Precision Neural Engineering Lab, Emory University Medical

Neural Plasticity Research Lab, Emory University Medical

Neuromechanics Lab, Emory University Medical

Recent Writing

What I Learned from Years Working with "Black Boxes"

Build for inspection. Make state observable. Use AI for what it excels at.

Why ML Interpretability Needs Storytellers

Epoche: making ML legible to domain experts, not just engineers.

The Divide Between Deterministic Simulation & Black-Box AI

Why MUSE uses LLMs for translation, not generation.

From Research Pipelines to Production

Lessons learned from years operating across different engineering worlds.