Gothic Grandma LLC

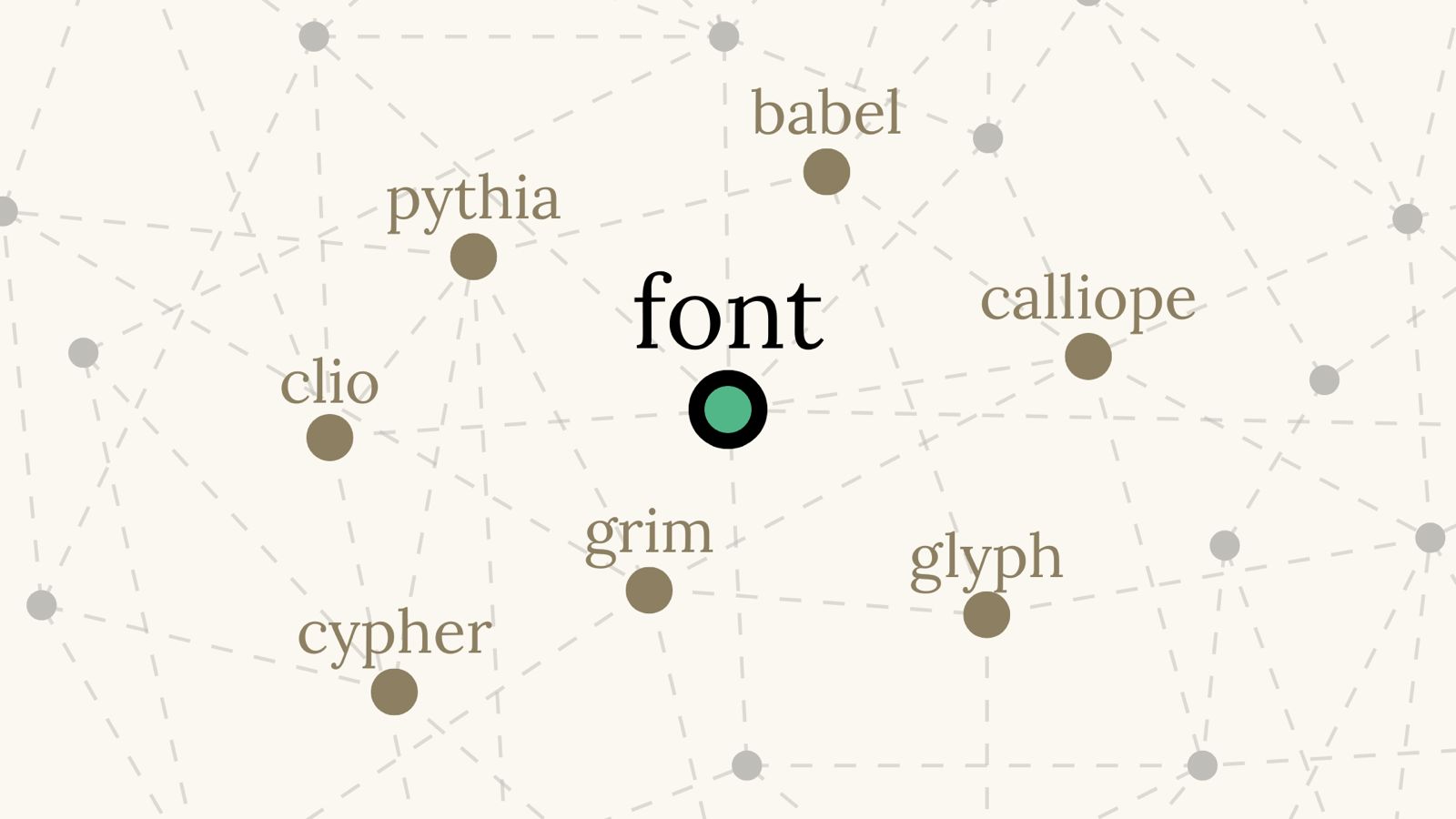

MUSE Ecosystem

Emergent World Simulation Platform

MUSE is an emergent world simulation platform that scales to 100k+ entities with biological needs, psychological states, and social relationships, all running on deterministic, inspectable systems. The primary application is interactive storytelling: narratives emerge from simulation state rather than scripts, with LLMs translating causality into natural language.

The platform consists of 12 tools spanning simulation, development, and consumer interfaces. Each tool solves a specific problem in the creation → execution → experience pipeline. All tools share the same backend infrastructure with role-based access.

LLMs are used strictly for input/output translation and narration. All simulation logic, state transitions, and causal systems are deterministic and fully inspectable.

Tool Ecosystem

Developer and Researcher Tooling

CYPHER Alpha

Entity Systems Designer & Profiler

Internal tool for designing Universal Entity systems via Tessera (visual constructor) and profiling simulation performance. Visual design → schema → GPU kernels.

CLIO Beta

AI-Augmented Dev Environment

Developer OS with Sibyl codebase intelligence (static analysis → database → architecture visualization), multi-terminal, AI pair programming, and session tracking.

Consumer Layer

GLYPH Alpha

Interactive E-Reader

Interactive fiction interface where you guide, not control. Type or speak to influence what characters notice, remember, and attempt.

CALLIOPE Alpha

Launcher & Library

Living Worlds marketplace and library manager. Downloads worlds, manages updates, launches GLYPH.

Research & Authoring (Scoped)

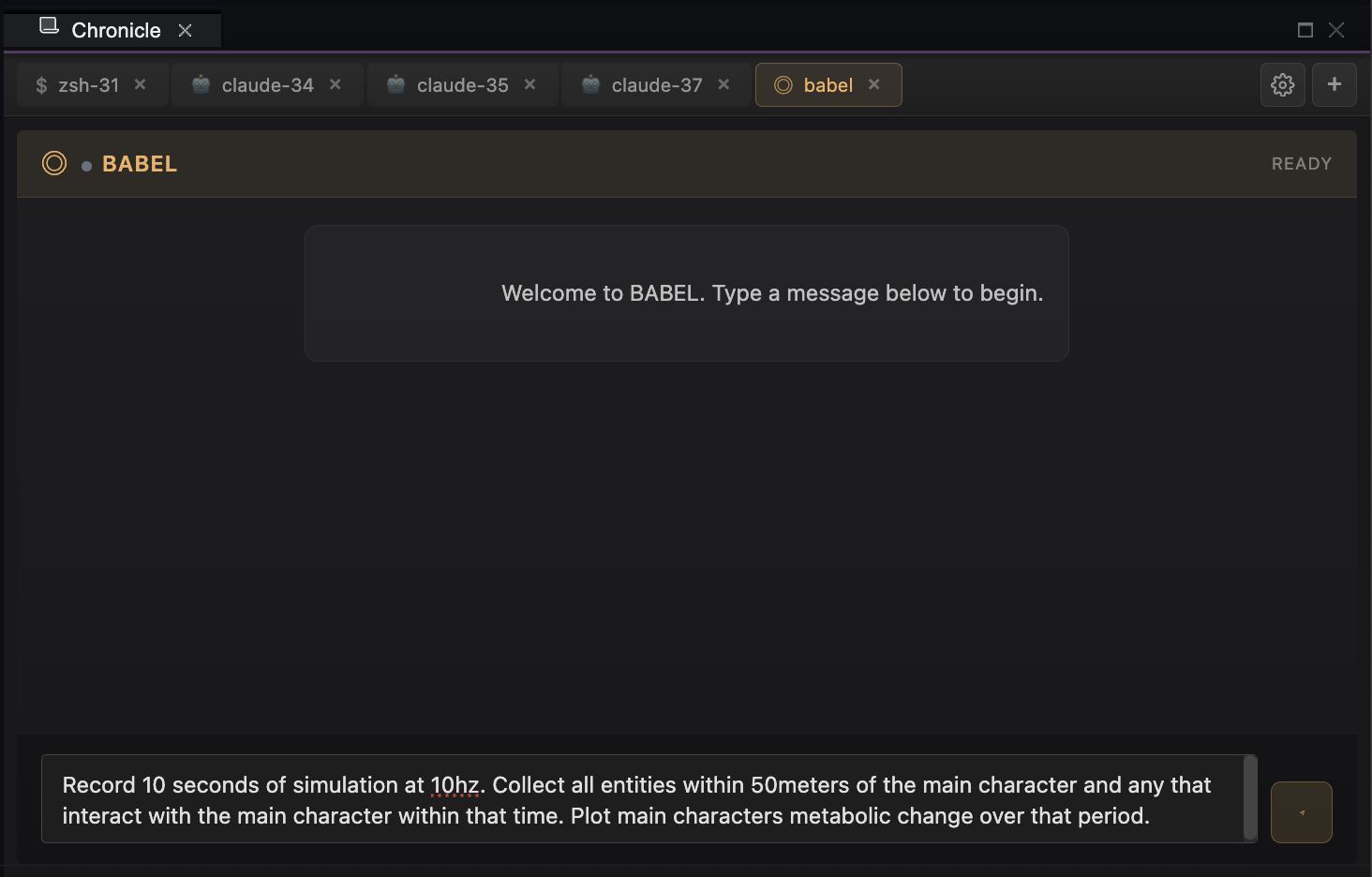

PYTHIA Scoped

Research Interface

Scientific instrumentation surface. Set parameters, run experiments on FONT, export results to CSV/MATLAB/database.

GRIM Scoped

Content Creation

AI-assisted world authoring. Creators think in semantics and description—GRIM quantifies input into simulation data.

Build Infrastructure

GESTALT Alpha

Kernel Generator

Schema → kernel compilation pipeline. Transforms visual system designs into optimized GPU binaries (CUDA/Metal/Vulkan/CPU).

TESSERA Alpha

Visual Constructor

React Flow node graph editor for building FONT kernel batches. Embedded as iframe panel within CYPHER workbench.

SIBYL Alpha

Architecture Analyzer

Python-based codebase analysis parsing C++, Go, Python, TypeScript, Dart, and SQL. Dependency mapping, API extraction, health metrics. Feeds into Architecture Explorer panel.

PYNAX Alpha

Registry Builder

Scans C++ and Go APIs to populate the master command registry. Extracts endpoints, permissions, and contract signatures needed for runtime command dispatch.

Platform Architecture

Visual → Compiled Pipeline

Communication Layers

Core Architecture

What Makes MUSE Different

Deterministic Simulation, Not Generation

- Real causality — consequences emerge from biological and psychological systems, not scripts

- Persistent state — worlds remember everything through database-backed memory, no LLM context limits

- Inspectable logic — trace any behavior back to root cause through explicit data flow

- No hallucination risk — simulation truth is ground truth; LLMs only translate at boundaries
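
As a rough illustration of the points above, here is a minimal Python sketch of a deterministic update with a causal trace and a narration boundary. It is not MUSE's implementation: the `Entity` class, the `hunger` field, the threshold of 80, and the `narrate` function (a stand-in for the LLM layer) are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str
    hunger: int = 0                            # hypothetical biological need, 0..100
    trace: list = field(default_factory=list)  # ordered record of causes and effects


def step(entity: Entity, hours: int) -> None:
    """Advance one entity deterministically: no randomness, no LLM call."""
    before = entity.hunger
    entity.hunger = min(100, before + 10 * hours)
    entity.trace.append(("time_passed", hours, before, entity.hunger))
    # A decision is recorded together with the condition that caused it.
    if before < 80 <= entity.hunger:
        entity.trace.append(("decision", "seek_food", "hunger reached 80"))


def narrate(entity: Entity) -> str:
    """Boundary layer: only rephrases facts already present in the trace."""
    kind, *details = entity.trace[-1]
    if kind == "decision":
        action, cause = details
        return f"{entity.name} decides to {action.replace('_', ' ')} because {cause}."
    return f"{entity.name} carries on."


# Two runs with identical inputs produce identical state and traces.
first, second = Entity("Mara"), Entity("Mara")
for entity in (first, second):
    step(entity, 5)
    step(entity, 4)
```

Because the narration reads only from `trace`, any sentence it produces can be followed back to a recorded state change, which is the property the list above describes as inspectable logic.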

On-Demand Execution

- Burst computation on prompts — processes 100,000+ entities in milliseconds when user acts

- Efficient by design — idle when paused, no wasted cycles on continuous rendering

- Scales independently — simulation complexity grows with world richness, not interaction frequency

- Central state management — shared database backend, resource-aware scheduling
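
The on-demand model above can be sketched in a few lines of Python. This is an assumption-laden toy: the `World` class, its `energy` field, and the drain rate of 2 per hour are hypothetical, and a pure-Python loop says nothing about the throughput of the GPU kernels the platform describes.

```python
class World:
    """Hypothetical world that performs no work until a prompt arrives."""

    def __init__(self, entity_count: int):
        self.energy = [100] * entity_count  # one integer need per entity
        self.last_tick = 0                  # simulated hours already applied
        self.bursts_run = 0                 # number of bursts executed so far

    def on_prompt(self, now_hours: int) -> int:
        """Catch every entity up in one burst, then return to idle."""
        elapsed = now_hours - self.last_tick
        if elapsed > 0:
            # Apply the whole elapsed interval at once to all entities.
            self.energy = [max(0, e - 2 * elapsed) for e in self.energy]
            self.last_tick = now_hours
            self.bursts_run += 1
        return elapsed


world = World(100_000)
world.on_prompt(6)  # first user action: one burst covering six hours
world.on_prompt(6)  # no simulated time has passed, so nothing runs
```

The cost here depends on the number of entities and the systems applied to them, not on how often `on_prompt` is called, which mirrors the claim that complexity grows with world richness rather than interaction frequency.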

Visual Design → Compiled Code

- Design visually — build systems in CYPHER's Constructor panel using node graphs, no hand-written simulation code

- Automatic compilation — visual designs compile to optimized GPU/CPU kernels via GESTALT

- Database as source of truth — all system definitions live in the schema; code generates from it

- Full traceability — every behavior traces back to its visual definition
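
To make the idea of generating code from a schema concrete, the sketch below emits and compiles a small Python update function from a dictionary. It only illustrates the general technique: GESTALT's real schema format and its CUDA/Metal/Vulkan/CPU output are not shown in this document, so `SCHEMA`, `generate_kernel_source`, and `compile_kernel` are hypothetical.

```python
# Hypothetical system definition; in the platform this would live in the database.
SCHEMA = {
    "system": "metabolism",
    "field": "hunger",
    "rate_per_hour": 10,
    "max": 100,
}


def generate_kernel_source(schema: dict) -> str:
    """Emit Python source for the update function the schema describes."""
    return (
        f"def {schema['system']}_update(state, hours):\n"
        f"    value = state[{schema['field']!r}] + {schema['rate_per_hour']} * hours\n"
        f"    state[{schema['field']!r}] = min({schema['max']}, value)\n"
        f"    return state\n"
    )


def compile_kernel(schema: dict):
    """Compile the generated source and return the resulting callable."""
    namespace = {}
    exec(generate_kernel_source(schema), namespace)
    return namespace[f"{schema['system']}_update"]


metabolism_update = compile_kernel(SCHEMA)
```

Calling `metabolism_update({"hunger": 30}, 4)` runs code that exists only because the schema says so. Changing `rate_per_hour` in `SCHEMA` and recompiling changes the behavior without anyone editing a function by hand.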

Unified Platform, Role-Based Access

- Role-based access — readers use GLYPH, engineers use CYPHER, researchers use PYTHIA

- Shared infrastructure — same Go backend, same database schemas, same panel system; tools differ by permissions and workflow, not implementation

- Real-time collaboration — multiple users can build the same systems simultaneously from different locations

- CLIO as operations hub — session tracking, terminal persistence, analysis scripting
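
One way to picture "tools differ by permissions and workflow, not implementation" is a single dispatch function guarded by a role table. The sketch below is hypothetical throughout: the role names follow the list above, but the permission strings, the handlers, and the `dispatch` function are invented for illustration and do not describe the actual Go backend.

```python
# Hypothetical permission sets keyed by the roles named in the text.
ROLE_PERMISSIONS = {
    "reader": {"world.read", "prompt.send"},
    "engineer": {"world.read", "system.edit", "profile.run"},
    "researcher": {"world.read", "experiment.run", "results.export"},
}

# One shared set of handlers stands in for the common backend.
HANDLERS = {
    "world.read": lambda: "world state",
    "system.edit": lambda: "system updated",
    "results.export": lambda: "exported",
}


def dispatch(role: str, command: str, handlers: dict = HANDLERS) -> str:
    """Run a command only if the caller's role grants it."""
    if command not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"{role!r} may not run {command!r}")
    return handlers[command]()
```

Every role reaches the same handlers. A reader calling `dispatch("reader", "system.edit")` gets a `PermissionError`, while an engineer issuing the same command succeeds.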

What Problem MUSE Solves

Problem: Modern interactive fiction, educational software, and AI-driven storytelling systems are built on static scripts or probabilistic text generation. They can be immersive, but they do not simulate causality. Their characters do not truly perceive, decide, or change in response to integrated biological, psychological, and social forces.

Solution: MUSE enables embodied, scientifically grounded simulation where:

- Perception, decision-making, learning, and relationships arise from real models of biology and psychology

- Long-term change emerges from cause-and-effect, not narrative branching

- Users can probe systems like scientists—not just consume a story

- Worlds persist, evolve, and surprise without relying on LLM memory or hallucinated state

The result is a new medium: interactive worlds where people don't just read about perspectives—they inhabit them.

Who It's For

- Readers — experience Living Worlds through GLYPH, interacting via natural language

- Researchers — will run experiments and export data via PYTHIA without touching code (in development)

- Creative directors — will author worlds through GRIM using semantics and description (in development)

- System engineers — build the perceptual, behavioral, and physical systems that power everything via CYPHER and CLIO

How does this connect? MUSE is where I study architecture → processing → behavior most directly, with full control over system design.